Compute Network

Compute Network Hardware Configuration

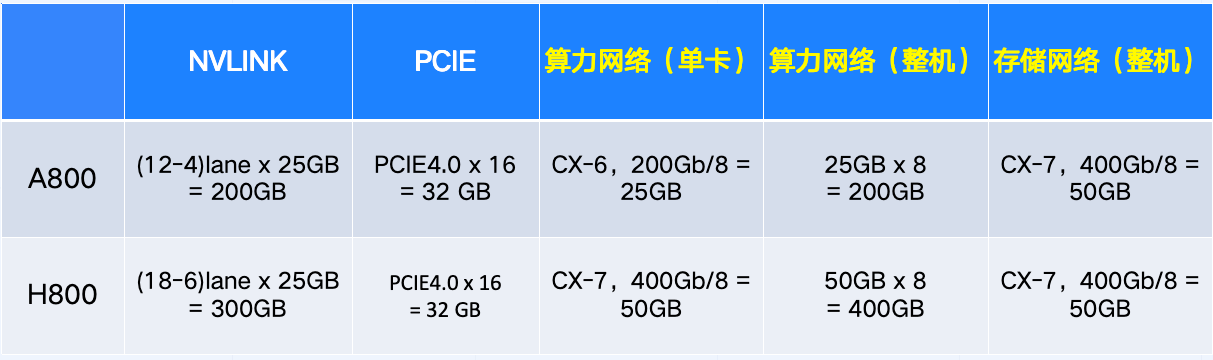

英博云 H800 and A800 machines are equipped with a dedicated compute network, configured as follows:

HCA Naming Convention

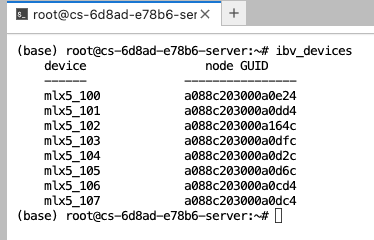

Each 英博云 H800 or A800 machine has eight HCA network adapters, named as follows:

- mlx5_100
- mlx5_101
- mlx5_102
- mlx5_103
- mlx5_104
- mlx5_105
- mlx5_106
- mlx5_107
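
Because the names follow a single pattern, the comma-separated value that NCCL reads from the NCCL_IB_HCA environment variable can be generated rather than typed by hand. A minimal sketch, assuming the eight adapters are numbered 100 through 107 as listed above and that the seq utility is available; the variable name hca_list is only illustrative:

```shell
# Build "mlx5_100,...,mlx5_107" from the naming pattern.
# printf reuses its format string once per argument produced by seq.
hca_list=$(printf 'mlx5_%d,' $(seq 100 107))
hca_list=${hca_list%,}   # strip the trailing comma

export NCCL_IB_HCA="$hca_list"
echo "$NCCL_IB_HCA"
```

The exported value can then be forwarded to every rank, for example with mpirun's -x NCCL_IB_HCA option.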

On a development machine, run the ibv_devices command to list them.
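
To confirm that all eight adapters are visible, the ibv_devices listing can be compared against the expected names. The helper below is a sketch rather than a platform tool: the function name missing_hcas is hypothetical, and it only assumes that each device name appears as a whole word somewhere in the listing.

```shell
# Print every expected HCA name (given as arguments) that does not
# appear as a whole word in the device listing read from stdin.
missing_hcas() {
  listing=$(cat)
  for name in "$@"; do
    # -w matches whole words only, so mlx5_10 does not match mlx5_100
    printf '%s\n' "$listing" | grep -qw -- "$name" || echo "$name"
  done
}

# Run the check only where ibv_devices is installed.
if command -v ibv_devices >/dev/null 2>&1; then
  ibv_devices | missing_hcas $(seq -f 'mlx5_%g' 100 107)
else
  echo "ibv_devices not found; skipping HCA check" >&2
fi
```

No output from the check means every expected adapter was found; any name that is printed is missing from the listing.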

Using the Compute Network in k8s Workloads

To use the compute network, declare the following in the workload's resources:

rdma/hca_shared_devices_ib: 1

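The following example uses a Kubeflow MPIJob to run a 2-node, 16-GPU NCCL test. It starts 2 workers, each of which requests 8 H800 GPUs and 8 compute network adapters; the launcher runs on an ordinary CPU node.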

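Note:

- For details on node types and instance specifications, see the platform's documentation on node types and instance specifications.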

---
apiVersion: kubeflow.org/v1
kind: MPIJob
metadata:
  name: nccl-test-slot8-worker2
spec:
  slotsPerWorker: 8
  cleanPodPolicy: Running
  mpiReplicaSpecs:
    Launcher:
      replicas: 1
      template:
        spec:
          hostNetwork: true
          hostPID: false
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                  - matchExpressions:
                      - key: cloud.ebtech.com/cpu # node type: CPU node
                        operator: In
                        values:
                          - amd-epyc-milan
          containers:
            - image: registry-cn-beijing2-internal.ebtech-inc.com/ebsys/pytorch:2.5.1-cuda12.2-python3.10-ubuntu22.04-v09
              name: mpi-launcher
              command: ["/bin/bash", "-c"]
              args: [
                "sleep 10 && \
                mpirun \
                -np 16 \
                --allow-run-as-root \
                -bind-to none \
                -x LD_LIBRARY_PATH \
                -x NCCL_IB_DISABLE=0 \
                -x NCCL_IB_HCA=mlx5_100,mlx5_101,mlx5_102,mlx5_103,mlx5_104,mlx5_105,mlx5_106,mlx5_107 \
                -x NCCL_SOCKET_IFNAME=bond0 \
                -x NCCL_ALGO=RING \
                -x NCCL_DEBUG=INFO \
                -x SHARP_COLL_ENABLE_PCI_RELAXED_ORDERING=1 \
                -x NCCL_COLLNET_ENABLE=0 \
                /opt/nccl-tests/build/all_reduce_perf -b 1G -e 8G -f 2 -g 1 #-n 200 #-w 2 -n 20
                ",
                ]
              resources: # instance size: 1 core, 2 GB
                limits:
                  cpu: "1"
                  memory: "2Gi"
    Worker:
      replicas: 2
      template:
        spec:
          hostNetwork: true
          hostPID: false
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                  - matchExpressions:
                      - key: cloud.ebtech.com/gpu # node type: H800 GPU node
                        operator: In
                        values:
                          - H800_NVLINK_80GB
          volumes:
            - emptyDir:
                medium: Memory
              name: dshm
          containers:
            - image: registry-cn-beijing2-internal.ebtech-inc.com/ebsys/pytorch:2.5.1-cuda12.2-python3.10-ubuntu22.04-v09
              name: mpi-worker
              command: ["/bin/bash", "-c"]
              volumeMounts:
                - mountPath: /dev/shm
                  name: dshm
              securityContext:
                capabilities:
                  add:
                    - IPC_LOCK
              args:
                - |
                  echo "Starting sleep infinity..."
                  sleep infinity
              resources:
                limits:
                  nvidia.com/gpu: 8 # instance size: 8-GPU machine
                  rdma/hca_shared_devices_ib: 8 # 8 HCA adapters
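
When the test finishes, all_reduce_perf from nccl-tests normally ends its output with a summary line that begins with "# Avg bus bandwidth". The helper below is a sketch for pulling that figure out of the launcher log. The function name avg_busbw is illustrative, and the pod name in the usage comment is a placeholder that depends on the cluster.

```shell
# Read an nccl-tests log from stdin and print the number on the
# "# Avg bus bandwidth" summary line (reported in GB/s).
avg_busbw() {
  awk -F':' '/Avg bus bandwidth/ {
    split($2, fields, " ")   # drop padding and any trailing status word
    print fields[1]
  }'
}

# Example usage (placeholder pod name):
#   kubectl logs <launcher-pod-name> | avg_busbw
```

Comparing this value between a single-node run and the two-node run is one way to judge whether traffic is actually crossing the compute network rather than falling back to the socket interface.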